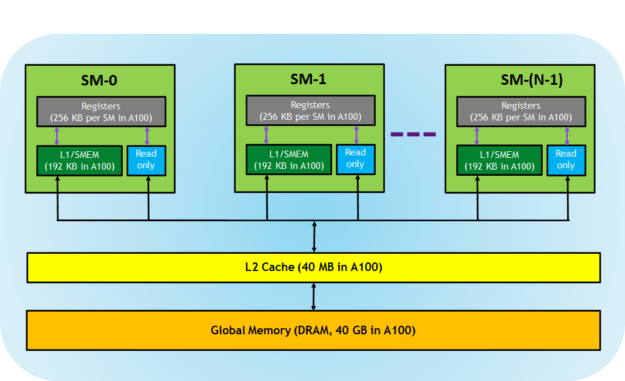

The GPU is especially well-suited to address problems that can be expressed as data-parallel computations (the same program is executed on many data elements in parallel) with high arithmetic intensity (the ratio of arithmetic operations to memory operations). Because the same program is executed for each data element, there is a lower requirement for sophisticated flow control. Because it is executed on many data elements and has high arithmetic intensity, the memory access latency can be hidden with calculations instead of big data caches.

Data-parallel processing maps data elements to parallel processing threads. Many applications that process large data sets can use a data-parallel programming model to speed up the computations. In 3D rendering, large sets of pixels and vertices are mapped to parallel threads. Similarly, image and media processing applications such as post-processing of rendered images, video encoding and decoding, image scaling, stereo vision, and pattern recognition can map image blocks and pixels to parallel processing threads. In fact, many algorithms outside the field of image rendering and processing are accelerated by data-parallel processing, from general signal processing or physics simulation to computational finance or computational biology.

The advent of multicore CPUs and manycore GPUs means that mainstream processor chips are now parallel systems. Furthermore, their parallelism continues to scale with Moore's law. The challenge is to develop application software that transparently scales its parallelism to leverage the increasing number of processor cores, much as 3D graphics applications transparently scale their parallelism to manycore GPUs with widely varying numbers of cores.

*Figure: The GPU Devotes More Transistors to Data Processing*

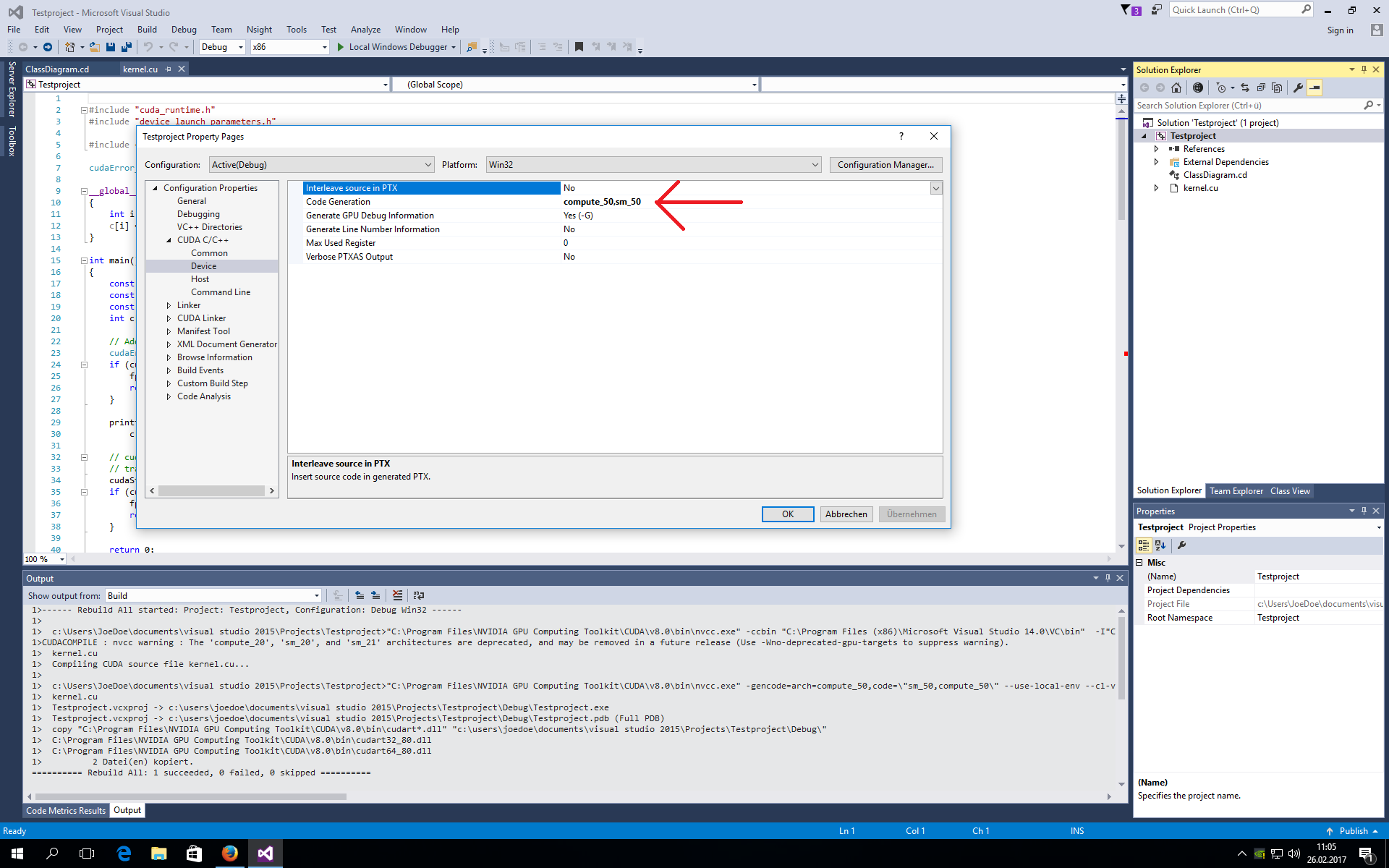
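As a sketch of how data-parallel processing maps data elements to threads, the CUDA C++ kernel below assigns one thread to each pixel of a grayscale image, using a two-dimensional `dim3` grid of 16x16 thread blocks. The names `invertKernel`, `invertImage`, and `CUDA_CHECK`, the 8-bit row-major image layout, and the pixel inversion itself are illustrative assumptions, not code from the original post.

```cuda
#include <cassert>
#include <cstdio>
#include <cstdlib>
#include <vector>
#include <cuda_runtime.h>

// Hypothetical helper: abort with a message if a CUDA runtime call fails.
#define CUDA_CHECK(call)                                                \
    do {                                                                \
        cudaError_t err_ = (call);                                      \
        if (err_ != cudaSuccess) {                                      \
            std::fprintf(stderr, "CUDA error %s at %s:%d\n",            \
                         cudaGetErrorString(err_), __FILE__, __LINE__); \
            std::exit(EXIT_FAILURE);                                    \
        }                                                               \
    } while (0)

// One thread per pixel: a 2D grid of 2D blocks covers the whole image.
__global__ void invertKernel(const unsigned char* in, unsigned char* out,
                             int width, int height) {
    int x = blockIdx.x * blockDim.x + threadIdx.x;  // column of this thread
    int y = blockIdx.y * blockDim.y + threadIdx.y;  // row of this thread
    if (x < width && y < height) {                  // skip threads in partial edge blocks
        int i = y * width + x;                      // row-major linear index
        out[i] = static_cast<unsigned char>(255 - in[i]);
    }
}

// Hypothetical host wrapper: copies the image to the GPU, inverts every
// pixel in parallel, and copies the result back.
std::vector<unsigned char> invertImage(const std::vector<unsigned char>& img,
                                       int width, int height) {
    assert(width > 0 && height > 0 &&
           img.size() == static_cast<std::size_t>(width) * height);
    std::size_t bytes = img.size();
    unsigned char* dIn = nullptr;
    unsigned char* dOut = nullptr;
    CUDA_CHECK(cudaMalloc(&dIn, bytes));
    CUDA_CHECK(cudaMalloc(&dOut, bytes));
    CUDA_CHECK(cudaMemcpy(dIn, img.data(), bytes, cudaMemcpyHostToDevice));

    dim3 block(16, 16);                                // 256 threads per block
    dim3 grid((width + block.x - 1) / block.x,         // round up so that every
              (height + block.y - 1) / block.y);       // pixel gets a thread
    invertKernel<<<grid, block>>>(dIn, dOut, width, height);
    CUDA_CHECK(cudaGetLastError());                    // catch launch errors

    std::vector<unsigned char> result(bytes);
    CUDA_CHECK(cudaMemcpy(result.data(), dOut, bytes, cudaMemcpyDeviceToHost));
    CUDA_CHECK(cudaFree(dIn));
    CUDA_CHECK(cudaFree(dOut));
    return result;
}
```

The grid size is derived from the image dimensions, not from the number of cores, so the same launch runs unchanged on GPUs with few or many multiprocessors; the hardware distributes the blocks over whatever is available. That is the kind of transparent scaling the text describes.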

# CUDA dim3 3-Dimension Compute 5.0 Code
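`dim3` also has a third dimension, which fits volumetric data naturally. The sketch below launches 8x8x8 blocks over an `nx` by `ny` by `nz` volume. Each thread writes its own linear index, so the host can check that every voxel was reached and that the index arithmetic is consistent. The names `fillIndexKernel`, `fillVolume`, and `CUDA_CHECK` are hypothetical. The code relies only on features available on compute capability 5.0 devices: three-dimensional grids and 512-thread blocks are both within the limits of that generation.

```cuda
#include <cassert>
#include <cstdio>
#include <cstdlib>
#include <vector>
#include <cuda_runtime.h>

// Hypothetical helper: abort with a message if a CUDA runtime call fails.
#define CUDA_CHECK(call)                                                \
    do {                                                                \
        cudaError_t err_ = (call);                                      \
        if (err_ != cudaSuccess) {                                      \
            std::fprintf(stderr, "CUDA error %s at %s:%d\n",            \
                         cudaGetErrorString(err_), __FILE__, __LINE__); \
            std::exit(EXIT_FAILURE);                                    \
        }                                                               \
    } while (0)

// One thread per voxel, using all three dimensions of the block and the grid.
__global__ void fillIndexKernel(int* vol, int nx, int ny, int nz) {
    int x = blockIdx.x * blockDim.x + threadIdx.x;
    int y = blockIdx.y * blockDim.y + threadIdx.y;
    int z = blockIdx.z * blockDim.z + threadIdx.z;
    if (x < nx && y < ny && z < nz) {       // guard partial blocks at the edges
        int i = (z * ny + y) * nx + x;      // x varies fastest in memory
        vol[i] = i;                         // record the thread's linear index
    }
}

// Hypothetical host wrapper: fills an nx * ny * nz volume on the GPU and
// returns it to the host.
std::vector<int> fillVolume(int nx, int ny, int nz) {
    assert(nx > 0 && ny > 0 && nz > 0);
    std::size_t count = static_cast<std::size_t>(nx) * ny * nz;
    int* dVol = nullptr;
    CUDA_CHECK(cudaMalloc(&dVol, count * sizeof(int)));

    dim3 block(8, 8, 8);                    // 512 threads per block
    dim3 grid((nx + block.x - 1) / block.x, // round each dimension up
              (ny + block.y - 1) / block.y,
              (nz + block.z - 1) / block.z);
    fillIndexKernel<<<grid, block>>>(dVol, nx, ny, nz);
    CUDA_CHECK(cudaGetLastError());

    std::vector<int> vol(count);
    CUDA_CHECK(cudaMemcpy(vol.data(), dVol, count * sizeof(int),
                          cudaMemcpyDeviceToHost));
    CUDA_CHECK(cudaFree(dVol));
    return vol;
}
```

Choosing 8x8x8 keeps each block cubic, so neighbouring threads work on spatially close voxels. A flatter shape such as 32x8x4 would favour wider rows; either is a tuning choice rather than a requirement.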

Added new section C++11 Language Features.
